The sys admin's daily grind: Varnish

Slow and Steady

Columnist Charly gives Apache a slick coat of Varnish for better performance.

One still hears the saying, "Salad tastes much better if you replace it with a juicy steak shortly before you dine," although the humor is debatable. Admins are given tips in the same vein: "Apache delivers static content much faster if you replace it with Nginx shortly before the launch." The saying may not faze vegetarians, but it can cause a stir among server admins, including me.

I've just been assigned the task of tuning an Apache 2.2 server, which serves almost exclusively static content, for maximum performance. For several reasons, I can't just send the old guy into the desert. Apache is undisputed in terms of flexibility; however, when it comes to performance, it doesn't even bother to take off in pursuit of its rivals.

If I'm not allowed to ditch Apache, I would at least like to put it on a faster steed: a fast cache such as Varnish [1]. The makers of Varnish designed it from scratch as a caching instance, and its capabilities are accordingly powerful. I could create complex rules in the specially provided Varnish Configuration Language (VCL), but for buffering a static website, all I really need is a lean configuration. To begin, I reconfigure Apache using the NameVirtualHost and Listen directives so that it listens on port 8080 instead of port 80 from now on; Varnish can then take over on port 80, and its cache can deliver cached content directly to the client.
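
As a rough sketch of the Apache side, the port change boils down to two directives. The snippet below only prints them; where they belong is an assumption that depends on the distribution (for example, ports.conf on Debian or httpd.conf on Red Hat), and any existing VirtualHost blocks bound to port 80 would presumably need the same change to 8080. The function name is hypothetical.

```shell
# Sketch only: print the two Apache 2.2 directives that move the server
# from port 80 to port 8080. The target file is NOT written here, because
# its location differs between distributions (assumption, see above).
apache_port_directives() {
    cat <<'EOF'
NameVirtualHost *:8080
Listen 8080
EOF
}

apache_port_directives
```

After Apache restarts with these directives, port 80 is free for Varnish.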

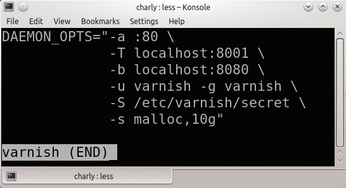

Most distributions include Varnish. Debian and its derivatives configure Varnish in /etc/default/varnish, whereas Red Hat and its relatives use /etc/sysconfig/varnish. I just need to adjust the command-line parameters for the daemon call (DAEMON_OPTS). Figure 1 shows the configuration I use for my Apache accelerator; -a :80 specifies that Varnish accepts connections on port 80 on all IPv4 and IPv6 addresses. The -b parameter tells Varnish where Apache has set up camp.

A running Varnish can be controlled via a Telnet interface whose address and port are specified with the -T option. For security reasons, I only open the port on localhost. Additionally, I must know the secret, which is stored in the file named by the -S parameter. The -u and -g parameters define the user and group under which Varnish runs, as you might expect.
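
Because Figure 1 is only available as an image, here is a rough sketch of how the options described above could be assembled in /etc/default/varnish. The control port 6082, the secret file /etc/varnish/secret, and the varnish user and group are assumptions based on common packaging defaults, not values confirmed by the column; the back-end address localhost:8080 follows from the Apache port change described earlier.

```shell
# Sketch of a DAEMON_OPTS setting; the file is sourced as shell by the init script.
#   -a  listen address for client connections (port 80, all addresses)
#   -b  back end, that is, Apache on its new port
#   -T  management interface, bound to localhost only
#   -S  file holding the shared secret (path is an assumption)
#   -u/-g  user and group to run as (names are assumptions)
#   -s  cache type and size
DAEMON_OPTS="-a :80 \
             -b localhost:8080 \
             -T localhost:6082 \
             -S /etc/varnish/secret \
             -u varnish -g varnish \
             -s file,/var/cache/varnish.cache,50%"
```

With these assumed values, the management interface could then be queried with varnishadm -T localhost:6082 -S /etc/varnish/secret status.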

Cache and Carry

The last line, with the -s parameter, is the most important one in terms of performance, because it controls the type and size of the cache. I can create the cache in RAM or on a disk partition. In this case, I type:

file,/var/cache/varnish.cache,50%

Varnish then uses up to half of the space on the filesystem that holds /var/cache. The tool claims that space right away, but the cache itself only fills up gradually.

Cold Turkey

Still, no one should expect miracles from a disk cache. My server fortunately has 16GB of RAM: Apache and Varnish consume some of this, but I can afford to hand over 10GB to the cache. If you try to skimp here, Varnish detours into swap space, and the speed advantage is gone. Thus, with -s malloc,10g, my legacy Apache can gallop off happily into the sunset.
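
The sizing argument above is simple arithmetic, sketched here as a small check. The helper name and the 4GB reserve for Apache, Varnish itself, and the operating system are assumptions for illustration; the column only states 16GB of RAM and a 10GB cache.

```shell
# Hypothetical helper: succeeds if a malloc cache of CACHE_GB, plus RESERVE_GB
# for everything else on the box, fits into TOTAL_GB of physical RAM.
cache_fits_in_ram() {
    total_gb=$1
    cache_gb=$2
    reserve_gb=$3
    [ $((cache_gb + reserve_gb)) -le "$total_gb" ]
}

# 16GB server, 10GB cache; the 4GB reserve is an assumed figure.
if cache_fits_in_ram 16 10 4; then
    echo "-s malloc,10g"
else
    echo "cache too large, expect swapping"
fi
```

If the check fails, a smaller malloc size is the safer choice, because a cache that spills into swap loses its speed advantage.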

Info

[1] Varnish: http://www.varnish-cache.org