Of lakes and sparks – How Hadoop 2 got it right

Misconceptions

Hadoop version 2 has transitioned from an application to a Big Data platform. Reports of its demise are premature at best.

In a recent story on the PCWorld website titled "Hadoop successor sparks a data analysis evolution," the author predicts that Apache Spark will supplant Hadoop in 2015 for Big Data processing [1]. The article is so full of mis- (or dis-)information that it really is a disservice to the industry. To provide an accurate picture of Spark and Hadoop, several topics need to be explored in detail.

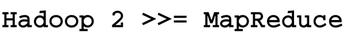

First, as in any article on "Big Data," it is important to define exactly what you are talking about. The term "Big Data" is a marketing buzz-phrase that has as much meaning as things like "Tall Mountain" or "Fast Car." Second, the concept of the data lake (less of a buzz-phrase and more descriptive than Big Data) needs to be defined. Third, Hadoop version 2 is more than a MapReduce engine. Indeed, if there is anything to take away from this article, it is the message in Figure 1. Finally, how Apache Spark fits neatly into the Hadoop ecosystem will be explained.

[...]
