Advanced Bash techniques for automation, optimization, and security

Subshells and Process Substitution

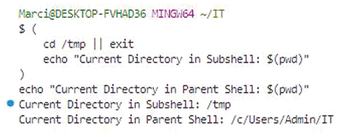

Subshells in Bash allow you to run commands in a separate execution environment, making them ideal for isolating variables or capturing command outputs. A common use case is encapsulating logic to prevent side effects (Listing 3).

Listing 3

Subshell

(
  cd /tmp || exit
  echo "Current Directory in Subshell: $(pwd)"
)
echo "Current Directory in Parent Shell: $(pwd)"

Here, changes made in the subshell, such as switching directories, do not affect the parent shell (Figure 2). This isolation is particularly useful in complex scripts where you want to maintain a clean environment.

Figure 2: The first echo will show /tmp as the current directory (inside the subshell). The second echo will show your original directory (parent shell).

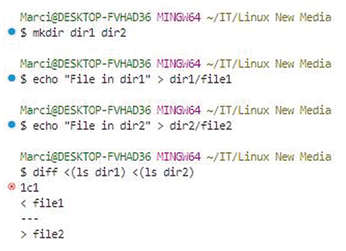
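The same isolation applies to variables, not only to the working directory. The following is a minimal sketch of that behavior; the variable names (build_mode, start_dir, root_dir) are hypothetical and chosen only for illustration.

```bash
#!/usr/bin/env bash
# Sketch: assignments and directory changes inside ( ... ) stay in the subshell.

build_mode="release"          # hypothetical variable, for illustration only
start_dir=$(pwd)

(
    build_mode="debug"        # changes only the subshell's copy
    cd / || exit 1            # an exit here leaves the subshell, not the script
    echo "Inside:  build_mode=$build_mode, dir=$(pwd)"
)

# The parent still sees its own value and its own working directory.
echo "Outside: build_mode=$build_mode, dir=$(pwd)"

# To hand a result back to the parent, capture the subshell's standard output.
root_dir=$(cd / && pwd)
echo "Captured from subshell: $root_dir"
```

Because a subshell cannot modify its parent, command substitution (or a file) is the usual way to pass a result back out.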

Process substitution is another powerful Bash feature that allows you to treat the output of a command as if it were a file. This capability enables seamless integration of commands that expect file inputs:

diff <(ls /dir1) <(ls /dir2)

The ls commands generate directory listings, which diff compares as if they were regular files (Figure 3). Process substitution enhances script efficiency by avoiding the need for intermediate temporary files.

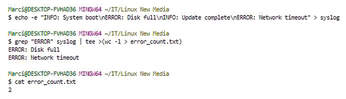
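Any command that takes file name arguments can read from a process substitution in the same way. As a rough sketch, the example below uses comm instead of diff to list the entries that exist in only one of two directories. The directories and file names are hypothetical and are created on the fly so that the example is self-contained. Note that process substitution is a Bash feature and is not available in a plain POSIX sh.

```bash
#!/usr/bin/env bash
# Sketch: compare two directory listings without intermediate files.

# Hypothetical sample directories, created only to make the example self-contained.
dir_a=$(mktemp -d)
dir_b=$(mktemp -d)
touch "$dir_a/app.conf" "$dir_a/db.conf"
touch "$dir_b/app.conf" "$dir_b/cache.conf"

# Each <( ... ) expands to a path (for example /dev/fd/63) that comm opens like a file.
# comm expects sorted input, so each listing is piped through sort first.
only_in_a=$(comm -23 <(ls "$dir_a" | sort) <(ls "$dir_b" | sort))
only_in_b=$(comm -13 <(ls "$dir_a" | sort) <(ls "$dir_b" | sort))

echo "Only in first directory:  $only_in_a"
echo "Only in second directory: $only_in_b"

rm -rf "$dir_a" "$dir_b"
```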

For scenarios involving data pipelines, process substitution can be combined with tee to simultaneously capture and process output:

grep "ERROR" /var/log/syslog | tee >(wc -l > error_count.txt)

This command filters lines containing "ERROR" from a logfile. tee passes the matching lines through to the terminal, while the process substitution counts them and writes the total to error_count.txt, so you can view and count the errors in a single pass (Figure 4).

Figure 4: Find lines containing "ERROR" and check the terminal for the error messages (output from grep).
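One detail to keep in mind is that the command inside >( ... ) runs asynchronously, so its output file may not be complete at the moment the pipeline returns. The sketch below works around this by polling briefly for the result. The sample log lines and the variable names are hypothetical, and a temporary file stands in for /var/log/syslog.

```bash
#!/usr/bin/env bash
# Sketch: view matching lines and count them in one pass with tee and >( ... ).

# Hypothetical sample data standing in for /var/log/syslog.
sample_log=$(mktemp)
count_file=$(mktemp)
printf '%s\n' \
    'Jan 10 10:00:01 host app: ERROR disk full' \
    'Jan 10 10:00:02 host app: INFO started' \
    'Jan 10 10:00:03 host app: ERROR timeout' > "$sample_log"

# tee copies the matches to its standard output (captured here) and to wc -l.
matches=$(grep "ERROR" "$sample_log" | tee >(wc -l > "$count_file"))

# The >( ... ) process runs asynchronously, so wait until it has written its result.
for _ in {1..50}; do
    [[ -s "$count_file" ]] && break
    sleep 0.1
done

# Some wc implementations pad the number with spaces, so strip them.
error_count=$(tr -d ' ' < "$count_file")

echo "$matches"
echo "Number of ERROR lines: $error_count"

rm -f "$sample_log" "$count_file"
```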

Scripting for Automation

Automation is at the heart of managing complex Linux environments, where tasks like parsing logs, updating systems, and handling backups must be executed reliably and efficiently. Shell scripting provides the flexibility to streamline these operations, ensuring consistency, scalability, and security. In this part, I will explore practical examples of dynamic logfile parsing, automated system updates, and efficient backup management, with a focus on real-world applications that IT professionals encounter in their daily workflows.

Logfile Parsing and Data Extraction

Logs are invaluable for monitoring system health, diagnosing issues, and maintaining compliance. However, manually analyzing logfiles in production environments is both impractical and error-prone. Shell scripts can dynamically parse logs to extract relevant data, highlight patterns, and even trigger alerts for specific conditions.

Consider the example of extracting error messages from a system log (/var/log/syslog) and generating a summary report. A script can achieve this dynamically (Listing 4).

Listing 4

Log Summary

#!/bin/bash

log_file="/var/log/syslog"
output_file="/var/log/error_summary.log"

# Check if log file exists
if [[ ! -f "$log_file" ]]; then
    echo "Error: Log file $log_file does not exist." >&2
    exit 1
fi

# Extract error entries and count occurrences
grep -i "error" "$log_file" | awk '{print $1, $2, $3, $NF}' | sort | uniq -c > "$output_file"

echo "Error summary generated in $output_file"

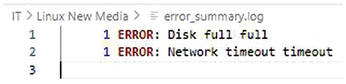

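This script checks that the logfile exists, extracts lines containing "error" (case-insensitively, because of grep -i), and uses awk to keep only the first three fields and the last field of each line (Figure 5). In the traditional syslog format, the first three fields are the month, day, and time; the last field is simply the final word of the message, which is an error code only if the log format puts one there. The sort and uniq -c steps group identical output lines and prefix each with a count. Because the timestamp is part of every line, only entries that share both the timestamp and the final field are merged. This approach can be extended to handle different log formats or integrate with tools like jq for JSON-based logs.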
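As one possible extension, the sketch below groups errors by the program that logged them instead of by timestamp. It assumes the traditional syslog layout in which the fifth field is the program tag (for example sshd[812]:). The function name summarize_errors and the sample log lines are hypothetical and serve only to make the example self-contained.

```bash
#!/usr/bin/env bash
# Sketch: count "error" lines per program tag, assuming traditional syslog format:
#   Mon DD HH:MM:SS host program[pid]: message

summarize_errors() {
    local log_file=$1
    grep -i "error" "$log_file" \
        | awk '{
                   tag = $5
                   sub(/\[[0-9]+\]:?$/, "", tag)   # strip a trailing "[pid]:" if present
                   sub(/:$/, "", tag)              # strip a bare trailing colon
                   count[tag]++
               }
               END { for (t in count) print count[t], t }' \
        | sort -k1,1nr -k2,2                       # highest count first, then by name
}

# Hypothetical sample data standing in for /var/log/syslog.
sample_log=$(mktemp)
printf '%s\n' \
    'Jan 10 10:00:01 web01 sshd[812]: error: maximum authentication attempts exceeded' \
    'Jan 10 10:00:05 web01 cron[901]: INFO job started' \
    'Jan 10 10:01:12 web01 nginx[455]: upstream error while connecting' \
    'Jan 10 10:02:40 web01 sshd[813]: error: kex_exchange_identification' > "$sample_log"

summary=$(summarize_errors "$sample_log")
echo "$summary"

rm -f "$sample_log"
```

Counting in awk rather than with sort and uniq -c keeps the grouping key explicit, which makes it easier to adapt the script when the log format differs.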

In a cloud environment, similar scripts can parse logs from multiple instances via SSH or feed centralized logging systems like Elastic Stack.
