Quantum computers and the quest for quantum-resilient encryption

Quantum-Resilient Alternatives

Production-ready quantum computers are still several years away. Stop-gap measures such as increasing key lengths and tweaking handshake procedures might work for a while, but eventually, the world will need a whole new class of encryption methods.

Today's security protocols are usually optimized for a specific procedure (e.g., a specific key exchange such as the Diffie-Hellman key exchange). Replacing this procedure was never intended; therefore, the protocol was not modularized accordingly. Designing the protocol around the encryption improves the efficiency of communication, and it also implicitly rules out certain attacks; however, the structure of the legacy protocol might complicate your efforts to migrate to a new encryption method.

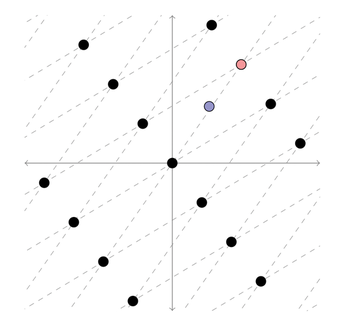

Cryptographers are exploring several potential techniques for quantum-resilient encryption. One promising alternative is based on the concept of lattices. In a two-dimensional lattice (Figure 2), it is comparatively easy to see which lattice point (red) is closest to an arbitrarily chosen point (purple). In a lattice with many dimensions, finding the closest point becomes computationally very difficult. If the lattice points represent all possible messages, a slightly shifted point could represent the encrypted message. The receiver, who has precise knowledge of the lattice, can find the nearest lattice point with comparative ease, whereas an attacker without this information would have a hard time. Lattices therefore enable efficient encryption and signature procedures. Although it is very difficult to find reliable parameters, this type of procedure is believed to be one of the most promising.

Figure 2: Multidimensional lattices can serve as the starting point of quantum-resilient encryption schemes.
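To make the role of "precise knowledge of the lattice" more concrete, the following minimal sketch applies Babai's rounding technique in two dimensions. It is an illustration only, not a cryptosystem: The lattice (all integer points), the two bases, and the function name babai_round are assumptions chosen for this example. Both bases describe the same lattice, but only the short, orthogonal basis lets simple rounding land on the nearest lattice point.

```python
def babai_round(basis, target):
    """Approximate the lattice point closest to target by rounding coordinates.

    basis  -- two 2D basis vectors ((a, b), (c, d))
    target -- the point (x, y) to decode
    """
    (a, b), (c, d) = basis
    x, y = target
    det = a * d - b * c
    if det == 0:
        raise ValueError("basis vectors must be linearly independent")
    # Solve c1*(a, b) + c2*(c, d) = (x, y) with Cramer's rule.
    c1 = (x * d - y * c) / det
    c2 = (a * y - b * x) / det
    # Round to the nearest integer combination of the basis vectors.
    k1, k2 = round(c1), round(c2)
    return (k1 * a + k2 * c, k1 * b + k2 * d)


good_basis = ((1, 0), (0, 1))  # short, orthogonal vectors (the "private" view)
bad_basis = ((5, 4), (6, 5))   # long, nearly parallel vectors, determinant 1
target = (0.4, -0.4)           # a lattice point plus a small shift

print(babai_round(good_basis, target))  # (0, 0), the true nearest point
print(babai_round(bad_basis, target))   # (-4, -4), far from the target
```

In two dimensions, an attacker could simply try all nearby points. The difficulty the article describes only sets in when the lattice has hundreds of dimensions.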

A quantum-resilient encryption method could also be built around error-correcting codes. McEliece's cryptosystem, for example, has been known since 1978 and has remained unbroken ever since. However, the McEliece technique requires the exchange of a public key that weighs in at around 1MB, which has made the cryptosystem unattractive thus far. Better alternatives exist for creating signatures: Hash-based signatures are already well understood and ready for use. Another interesting area is isogenies [4] on supersingular elliptic curves [5] (not to be confused with classical cryptography based on elliptic curves). However, research on this approach has only been under way for a few years, and many questions remain open.
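As an illustration of why hash-based signatures are considered well understood, here is a minimal sketch of a Lamport one-time signature, probably the simplest member of this family. The function names and the choice of SHA-256 are assumptions made for this example, and a key pair generated this way must only ever sign a single message. Schemes intended for real use build on the same idea but add constructions that allow many signatures per key.

```python
import hashlib
import secrets


def _h(data):
    return hashlib.sha256(data).digest()


def _digest_bits(message):
    """Return the 256 bits of the message's SHA-256 digest."""
    digest = _h(message)
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]


def keygen():
    """Create a one-time key pair: 256 pairs of secrets and their hashes."""
    private = [(secrets.token_bytes(32), secrets.token_bytes(32))
               for _ in range(256)]
    public = [(_h(s0), _h(s1)) for s0, s1 in private]
    return private, public


def sign(private, message):
    """Reveal one secret per digest bit. Use each key pair only once."""
    return [private[i][bit] for i, bit in enumerate(_digest_bits(message))]


def verify(public, message, signature):
    """Check that each revealed secret hashes to the matching public value."""
    if len(signature) != 256:
        return False
    bits = _digest_bits(message)
    return all(_h(signature[i]) == public[i][bits[i]] for i in range(256))


private_key, public_key = keygen()
signature = sign(private_key, b"update-1.0.tar.gz")
print(verify(public_key, b"update-1.0.tar.gz", signature))
print(verify(public_key, b"update-1.1.tar.gz", signature))
```

The security rests solely on the hash function, which is why this family is regarded as a conservative choice. The price is size: The signature in this sketch is 8KB.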

Some experts point to the benefits of a hybrid approach that would incorporate multiple procedures: If several procedures are used in parallel, the security of the overall system rests on all of them, which spreads the risk if one of the methods is broken. In this context, experts speak of crypto-agility, a term that encompasses easy replacement of procedures, an easy way to respond to security incidents, and appropriate mitigation of incidents.

This hybrid approach seems relatively easy to implement for a task such as software updates. The update mechanism is modified such that, in addition to checking a classical signature, it also checks a second, quantum-resilient signature. The overhead is then limited to the additional signatures and verification times.
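A hybrid check of this kind could be sketched as follows. The function verify_hybrid and the two placeholder verifiers are hypothetical and contain no real cryptography. In an actual update mechanism, each entry would wrap the verification routine of a real signature library.

```python
def verify_hybrid(payload, signatures, verifiers):
    """Accept the payload only if every required verifier accepts its signature.

    signatures -- dict mapping a scheme name to the signature bytes
    verifiers  -- dict mapping a scheme name to a function(payload, sig) -> bool
    """
    # A missing or unexpected signature is treated as a failure.
    if set(signatures) != set(verifiers):
        return False
    results = [verifiers[name](payload, signatures[name]) for name in verifiers]
    return all(results)


# Placeholder verifiers for demonstration only (NOT real signature checks).
demo_verifiers = {
    "classical": lambda payload, sig: sig == b"ok-classical",
    "post_quantum": lambda payload, sig: sig == b"ok-pq",
}

update = b"firmware image"
good = {"classical": b"ok-classical", "post_quantum": b"ok-pq"}
partly_forged = {"classical": b"ok-classical", "post_quantum": b"forged"}

print(verify_hybrid(update, good, demo_verifiers))           # True
print(verify_hybrid(update, partly_forged, demo_verifiers))  # False
```

Requiring all signatures to be valid means an attacker has to break every scheme involved, which is the risk-spreading effect described above.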

VPNs

VPNs are an important part of today's networks, and encryption is essential to the privacy provided by a VPN. The protocols most commonly used with VPNs are not well prepared for attacks by quantum computers, and even some of the quantum-resilient alternatives are not well suited to use in VPNs. For example, the 1MB public key required by the quantum-resilient McEliece method is 2,000 times bigger than the keys today's protocols require. This also affects software development: A programmer cannot allocate an arbitrary amount of memory on a system. In other words, software that was written for the data sizes that are common today (or were common in the past) may need to be adapted for the new methods.

In the case of the IPsec VPN protocol, there is broad consensus that, as long as no suitably powerful quantum computer exists, you merely need to add methods that are quantum-resilient, but you do not yet need to replace the classical methods. Based on this approach, initial solutions have already been discussed for the Internet Key Exchange v2 (IKEv2) protocol, which is widespread in IPsec. These solutions are laid down in two Internet drafts currently under review. The drafts specify how additional messages can be exchanged in the protocol, because the keys of most quantum-resilient alternatives are too large to be sent together with the underlying Diffie-Hellman exchange without exceeding the maximum size of the initial message. (The maximum size is not just determined by the protocol but also depends on external parameters of the network, such as the Maximum Transmission Unit or MTU.)

Therefore, you need to insert several messages for additional key negotiations between the initial handshake, after which the connection is secured with an AES session key derived from Diffie-Hellman, and the authentication step. With the help of a quantum-resilient method (or several), additional secrets could be exchanged and the symmetric key could be updated. The connection would then be secured using at least two methods, which would also make it quantum-resilient.
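The idea of updating the symmetric key with additional secrets can be sketched with a generic key derivation step. The example below is not the mechanism specified in the IKEv2 drafts. It is a stand-in that uses HKDF with SHA-256 (RFC 5869), and the function derive_hybrid_key, the info label, and the input values are assumptions made for illustration.

```python
import hashlib
import hmac


def hkdf_extract(salt, input_key_material):
    """HKDF extract step: condense the input into a pseudorandom key."""
    return hmac.new(salt, input_key_material, hashlib.sha256).digest()


def hkdf_expand(pseudorandom_key, info, length):
    """HKDF expand step: stretch the pseudorandom key to the wanted length."""
    if length > 255 * 32:
        raise ValueError("requested key is too long for HKDF-SHA256")
    output, block, counter = b"", b"", 1
    while len(output) < length:
        block = hmac.new(pseudorandom_key, block + info + bytes([counter]),
                         hashlib.sha256).digest()
        output += block
        counter += 1
    return output[:length]


def derive_hybrid_key(classical_secret, pq_secrets, salt, length=32):
    """Derive one session key from a classical secret plus extra secrets."""
    material = b""
    for secret in [classical_secret, *pq_secrets]:
        # Length-prefix each secret so the concatenation is unambiguous.
        material += len(secret).to_bytes(4, "big") + secret
    prk = hkdf_extract(salt, material)
    return hkdf_expand(prk, b"hybrid session key (example label)", length)


# Hypothetical inputs: a Diffie-Hellman secret and one KEM shared secret.
dh_secret = bytes.fromhex("11" * 32)
kem_secret = bytes.fromhex("22" * 32)
session_key = derive_hybrid_key(dh_secret, [kem_secret], salt=b"nonces")
print(session_key.hex())
```

Because every secret feeds into the derivation, the resulting key stays unpredictable as long as at least one of the contributing methods remains unbroken.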

But even that doesn't solve all the problems. The maximum size of an IKEv2 message is 64KB, which is too small for some procedures, including some McEliece configurations. Therefore, the key itself would have to be distributed over several messages and reassembled at the recipient's end by means of an internal counter. Possible solutions to this problem are currently being worked on, but their security has not yet been conclusively demonstrated.
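Splitting a large key and reassembling it with a counter could look like the following minimal sketch. The fragment format (index, total, chunk), the function names, and the payload size of 60,000 bytes are assumptions for illustration and do not reflect the wire format of any draft.

```python
def fragment(key, max_payload):
    """Split key into (index, total, chunk) fragments of at most max_payload bytes."""
    if max_payload <= 0:
        raise ValueError("max_payload must be positive")
    chunks = [key[i:i + max_payload] for i in range(0, len(key), max_payload)]
    total = len(chunks)
    return [(index, total, chunk) for index, chunk in enumerate(chunks)]


def reassemble(fragments):
    """Rebuild the key from fragments that may arrive in any order."""
    if not fragments:
        raise ValueError("no fragments received")
    total = fragments[0][1]
    by_index = {}
    for index, frag_total, chunk in fragments:
        if frag_total != total or not 0 <= index < total:
            raise ValueError("inconsistent fragment header")
        if index in by_index:
            raise ValueError("duplicate fragment")
        by_index[index] = chunk
    if len(by_index) != total:
        raise ValueError("fragments missing")
    return b"".join(by_index[i] for i in range(total))


# Stand-in for a public key of roughly 1MB.
public_key = bytes(range(256)) * 4096
parts = fragment(public_key, max_payload=60_000)
print(len(parts))                                      # 18
print(reassemble(list(reversed(parts))) == public_key)  # True
```

A sketch like this says nothing about security. The fragments themselves are unauthenticated here, which is one reason why the security of such extensions still needs to be demonstrated.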

Quantum-resilient encryption poses not only protocol-related questions, but also methodological ones. The Diffie-Hellman key exchange, which is used in almost all common protocols today, lets both communication partners participate equally and with equal communication overhead in the process of creating the final symmetric key. This is not always the case with key-encapsulation mechanisms (KEMs), which are common in the field of post-quantum cryptography, because here the key is transmitted from one communication partner to the other. For McEliece, this means, for example, that a great deal of data travels in one direction and very little in the other. This asymmetry, which is unusual for existing protocols, might be exploitable by attackers even without a quantum computer. Similarly, it is important to clarify how best to achieve properties such as forward secrecy when KEMs are used in protocols or use cases.

Note that these changes are occurring with protocols that were deliberately kept simple to eliminate or mitigate the effects of a few specific attacks. Implementing hybrid or crypto-agile solutions dials up the complexity, which could make the protocol susceptible to other sorts of attacks, such as denial-of-service attacks. It would therefore be important for the developer to provide other safeguards, such as checking to see if an attacker is trying to fill the receiver's memory with unnecessary data disguised as key material.
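One possible safeguard against memory exhaustion is a receive buffer that enforces hard limits before it stores anything. The class BoundedKeyBuffer and its limits are hypothetical. In practice, the limits would presumably follow from the known key size of the negotiated algorithm.

```python
class BoundedKeyBuffer:
    """Collect key fragments while enforcing limits on count and total size."""

    def __init__(self, max_fragments, max_total_bytes):
        self.max_fragments = max_fragments
        self.max_total_bytes = max_total_bytes
        self._chunks = {}
        self._size = 0

    def add(self, index, chunk):
        """Store one fragment or raise ValueError if a limit would be violated."""
        if not 0 <= index < self.max_fragments:
            raise ValueError("fragment index out of range")
        if index in self._chunks:
            raise ValueError("duplicate fragment")
        if self._size + len(chunk) > self.max_total_bytes:
            raise ValueError("key material exceeds the expected size")
        self._chunks[index] = chunk
        self._size += len(chunk)

    @property
    def size(self):
        return self._size


buffer = BoundedKeyBuffer(max_fragments=4, max_total_bytes=100)
buffer.add(0, b"a" * 60)
try:
    buffer.add(1, b"b" * 60)  # would bring the total to 120 bytes
except ValueError as error:
    print("rejected:", error)
print(buffer.size)  # 60
```

The checks run before any data is stored, so a rejected fragment never consumes memory beyond the configured budget.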

Next Steps

To enable adaptation of the existing infrastructure with all its protocols and the software on a wide variety of systems, standardization bodies are already addressing the problem. The challenge is to find a consensus on how to extend the protocols to communicate both practically and securely in the future. New solutions are needed in some cases, especially since the focus is now on the modularity of the mechanisms and hybrid application of the procedures.

The problem can definitely be solved. The US National Institute of Standards and Technology (NIST) is currently running a standardization process [6]. Organizations like the German Federal Office for Information Security (BSI) advise on migration and recommend quantum-resistant algorithms. However, it will probably be years before the standards are adopted and established in practice. For highly sensitive use cases, if you wait for the standard, you might already be too late.
