Software-defined networking puts an end to patchwork administration

Re-Envisioning the Network

Globalization, a rapidly growing number of devices, virtualization, the cloud, and "bring your own device" policies make classically organized IP networks difficult to plan and manage. Instead of struggling with these problems, some admins tackle them with a radically new approach: software-defined networking.

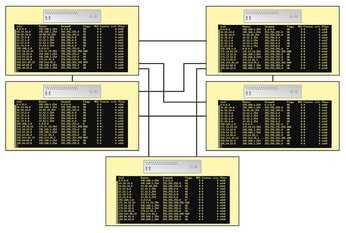

Traditional IP networks are made up of a number of autonomously operating devices: switches, routers, and firewalls. Each device evaluates incoming data packets by checking its tables and forwards the packets accordingly. For this to work, the devices need to know the network topology (Figure 1). The position of a particular device on the network defines its function; moving the device complicates matters and requires reconfiguration.

Figure 1: A traditional IP network consists of physical devices that store information in tables and communicate according to defined rules.
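
To make the table lookup concrete, the following minimal Python sketch shows how a single device might pick an outgoing port for a packet. It assumes longest-prefix matching, the usual rule in IP routers, which the text above does not spell out. The table entries, the port names, and the `lookup` function are hypothetical and serve only as illustration.

```python
import ipaddress

# Hypothetical forwarding table: (destination prefix, outgoing port).
# Entries and port names are invented for this example.
FORWARDING_TABLE = [
    (ipaddress.ip_network("0.0.0.0/0"), "uplink"),   # default route
    (ipaddress.ip_network("10.0.0.0/8"), "port1"),
    (ipaddress.ip_network("10.1.0.0/16"), "port2"),
]


def lookup(dst, table=FORWARDING_TABLE):
    """Return the port of the most specific matching prefix, or None."""
    addr = ipaddress.ip_address(dst)
    # Collect every entry whose prefix contains the destination address.
    matches = [(net, port) for net, port in table if addr in net]
    if not matches:
        return None
    # Longest-prefix match: the entry with the largest prefix length wins.
    return max(matches, key=lambda entry: entry[0].prefixlen)[1]


if __name__ == "__main__":
    for dst in ("10.1.2.3", "10.2.0.1", "192.0.2.1"):
        print(dst, "->", lookup(dst))
```

Every device in a traditional network holds and maintains a table of this kind on its own, which is why a change in topology means touching many devices.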

Over time, the Internet Engineering Task Force (IETF) has given administrators a series of protocols that extend the simple control logic. However, these extensions typically address only one specific task and work in relative isolation. In addition, the growing number of these standards (in conjunction with vendor-specific optimizations and software dependencies) has greatly increased the complexity of today's networks.

[...]
