Calculating Probability

Perl – Think Again

To tackle mathematical problems with conditional probabilities, math buffs rely on Bayes' formula or discrete distributions, generated by short Perl scripts.


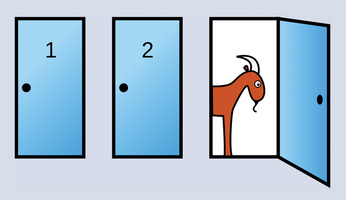

The Monty Hall problem is loved by statisticians around the world [1]. You might be familiar with this puzzle, in which a game show host offers a contestant a choice of three doors: behind one door is a prize, while the other two hide goats. After the contestant chooses a door, the TV host opens a different door, revealing a goat, and asks the contestant whether they want to switch (Figure 1). Who would have thought that probabilities in a static television studio could change so dramatically just because the host opens a door without a prize?

Figure 1: Hoping to win the car, the contestant chooses door 1. The host, who knows which door leads to the car, then opens door 3, revealing a goat. He offers the contestant the option of picking another door. Does it make sense for the contestant to change their mind and go for door 2? (Source: Wikipedia)
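The question in Figure 1 can be worked through with Bayes' formula. The following is a sketch under the usual textbook assumptions, which the text above does not spell out: the host always opens a door that hides a goat, never opens the contestant's door, and picks at random when two goat doors are available. The symbols are notation introduced here for illustration: $C_i$ means "the car is behind door $i$," and $H_3$ means "the host opens door 3" after the contestant has picked door 1.

$$
\begin{aligned}
P(C_1) = P(C_2) = P(C_3) &= \tfrac{1}{3} \\
P(H_3 \mid C_1) = \tfrac{1}{2}, \quad P(H_3 \mid C_2) = 1, \quad P(H_3 \mid C_3) &= 0 \\
P(H_3) = \tfrac{1}{3}\cdot\tfrac{1}{2} + \tfrac{1}{3}\cdot 1 + \tfrac{1}{3}\cdot 0 &= \tfrac{1}{2} \\
P(C_2 \mid H_3) = \frac{P(H_3 \mid C_2)\,P(C_2)}{P(H_3)} = \frac{1 \cdot \tfrac{1}{3}}{\tfrac{1}{2}} &= \tfrac{2}{3} \\
P(C_1 \mid H_3) = \frac{P(H_3 \mid C_1)\,P(C_1)}{P(H_3)} = \frac{\tfrac{1}{2} \cdot \tfrac{1}{3}}{\tfrac{1}{2}} &= \tfrac{1}{3}
\end{aligned}
$$

Under these assumptions, switching to door 2 wins the car two times out of three, while staying with door 1 wins only one time out of three.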


[...]
