Of lakes and sparks – How Hadoop 2 got it right

Misconceptions

Hadoop version 2 has transitioned from an application to a Big Data platform. Reports of its demise are premature at best.

In a recent story on the PCWorld website titled "Hadoop successor sparks a data analysis evolution," the author predicts that Apache Spark will supplant Hadoop in 2015 for Big Data processing [1]. The article is so full of mis- (or dis-)information that it really is a disservice to the industry. To provide an accurate picture of Spark and Hadoop, several topics need to be explored in detail.

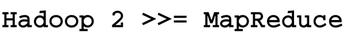

First, as in any article on "Big Data," it is important to define exactly what you are talking about. The term "Big Data" is a marketing buzz-phrase that carries about as much meaning as "Tall Mountain" or "Fast Car." Second, the concept of the data lake (less of a buzz-phrase and more descriptive than Big Data) needs to be defined. Third, Hadoop version 2 is more than a MapReduce engine. Indeed, if there is anything to take away from this article, it is the message in Figure 1. Finally, how Apache Spark fits neatly into the Hadoop ecosystem will be explained.

[...]
