Bonding your network adapters for better performance

Together

© Photo by Andrew Moca on Unsplash

Combining your network adapters can speed up network performance – but a little more testing could lead to better choices.

I recently bought a used HP Z840 workstation to use as a server for a Proxmox [1] virtualization environment. The first virtual machine (VM) I added was an Ubuntu Server 22.04 LTS instance with nothing on it but the Cockpit [2] management tool and the WireGuard [3] VPN solution. I planned to use WireGuard to connect to my home network from anywhere, so that I could back up and retrieve files as needed and manage the other devices in my home lab. WireGuard also lets me use the sketchy WiFi networks found in cafes and malls with less worry about someone snooping on my traffic.

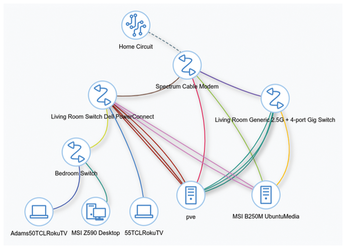

The Z840 has a total of seven network interface cards (NICs) installed: two on the motherboard and five more on two separate add-in cards. My second server, which runs a backup WireGuard instance, has four Gigabit NICs in total. Figure 1 is a screenshot from NetBox that shows how everything is connected to my two switches and the ISP-supplied router for as much redundancy as I can get from a single home network connection.

[...]
