Defragmenting Linux

Optimizing data organization on disk

Defragfs optimizes files on a system, allowing videos to load faster and large archives to open in the blink of an eye.

In this age of digital content, data collections in normal households are growing rapidly. Whereas just a few years ago hard drives with a couple hundred gigabytes were perfectly sufficient, today storage capacities of several terabytes are common.

The Linux filesystems ext2, ext3, and ext4 don’t need that much attention, but over time, after innumerable writes and deletes, data becomes fragmented. This slows down not just the hard disk itself, but also the entire system – sometimes noticeably. Thus, power users, even on Linux, are advised to reorganize their data occasionally.

Fragmented

Fragmentation primarily affects larger files that will not fit into any single contiguous free area on the hard drive; the filesystem then has to store such a file in several separate segments (see the “Theory” box). When the file is read, the read heads have to move to a new position several times to gather the pieces. Each movement takes time, and the total delay grows the more heavily a disk is fragmented.
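To put a rough number on this delay, the following back-of-the-envelope estimate assumes that each additional fragment costs one repositioning of the read heads and that one repositioning (seek plus rotational latency) takes about 10 ms on a typical desktop hard disk. Both the 10 ms figure and the 500-fragment file are illustrative assumptions, not measurements from our lab.

```latex
\Delta t \approx (n - 1)\, t_{\mathrm{seek}}
  = (500 - 1) \times 10\,\mathrm{ms} \approx 5\,\mathrm{s}
```

Here n is the number of fragments and t_seek the assumed time per head movement; a file stored in one piece needs only the initial positioning.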

User data isn’t the only contributor to fragmentation; the operating system itself adds to it. Power users who like to experiment and often try out software also contribute to fragmentation by installing new programs and then deleting them again.

Partitions on which more than half of the capacity is in use fragment more heavily, because the lack of free space forces the filesystem to spread larger files over an increasing number of areas.

Finally, generally useful cleanup tools like BleachBit or Rpmorphan can aggravate the situation under certain circumstances by removing files that are no longer needed: Deleting files that sit between allocated segments leaves behind scattered, non-contiguous free blocks.

Prevention

To minimize fragmentation, common filesystems like ext2 and its successors ext3 and ext4 include mechanisms that counteract the effect. Ext4, for example, uses delayed allocation: It keeps data to be written in RAM for a while, until the final size of the file is known, so that it can place the data in a single contiguous free area on the hard disk. Moreover, the filesystems reserve free blocks for growing files so that they can be stored in one piece.

Even such forward-looking mechanisms cannot solve the problem completely. As with many problems, however, Linux includes on-board solutions where other operating systems require additional, and often expensive, software. Fragmentation is no exception: Linux offers a number of ways to resolve the issue.

Before you start what could be a quite lengthy defragmentation run, depending on the size of your partition, you should first check whether the disk in question is affected by the problem at all. Low data throughput does not necessarily indicate a fragmented disk. On newer Advanced Format drives (AFD) with 4KB physical sectors, in particular, a partition that is not aligned to those sectors can cause a significant loss of speed even without any fragmented data.
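A common misconfiguration on disks with 4KB physical sectors is a partition that does not start on a 4KB boundary. The following minimal sketch assumes you read the start sector in 512-byte units, as the kernel reports it in /sys/block/<disk>/<partition>/start, and treats a start that is a multiple of 8 (8 x 512 bytes = 4096 bytes) as aligned. The function name is hypothetical, not part of any package.

```bash
# Hypothetical helper: report whether a partition that starts at the given
# 512-byte sector is aligned to 4096-byte physical sectors.
# 4096 / 512 = 8, so an aligned start sector is a multiple of 8.
check_4k_alignment() {
  local start_sector=$1
  if [ $((start_sector % 8)) -eq 0 ]; then
    echo "aligned (start sector $start_sector)"
  else
    echo "misaligned (start sector $start_sector)"
    return 1
  fi
}
```

For example, check_4k_alignment "$(cat /sys/block/sda/sda1/start)" examines the first partition on /dev/sda. A legacy start sector of 63 is reported as misaligned, while 2048 is aligned.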

To determine the degree of fragmentation, first install the e2fsprogs package, if this was not already done during the initial configuration. The tools in this package provide important data on the ext2/3/4 filesystems.

Make sure you unmount the partition beforehand. You can do this, if needed, by using the command

$ umount <devicefile>

and running the following command with administrative privileges in a terminal:

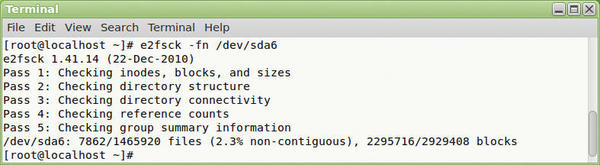

# e2fsck -fn <devicefile>

When the check finishes, the last line of the output shows the percentage of non-contiguous (fragmented) files. Don’t let what might look like a large value scare you: Defragmenting is not worthwhile on Linux until this value exceeds 20 percent (Figure 1).
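If you check several partitions regularly, you can let a small function read the percentage for you. This sketch assumes the e2fsck summary line has the form "/dev/sdb1: 11/65536 files (9.1% non-contiguous), 12955/262144 blocks" and uses the 20 percent rule of thumb as its default threshold. The function name and its exit codes are hypothetical choices.

```bash
# Hypothetical helper: read e2fsck output on stdin, pull the
# "(N.N% non-contiguous)" figure from the summary line, and compare it
# with a threshold (default: the 20 percent rule of thumb).
# Exit status: 0 = above threshold, 1 = below, 2 = no summary line found.
frag_verdict() {
  local threshold=${1:-20}
  awk -v limit="$threshold" '
    match($0, /\([0-9.]+% non-contiguous\)/) {
      pct = substr($0, RSTART + 1, RLENGTH - 1)  # drop the opening "("
      sub(/%.*/, "", pct)                        # keep only the number
      found = 1
    }
    END {
      if (!found) { print "no summary line found"; exit 2 }
      if (pct + 0 > limit + 0) {
        printf "%s%% non-contiguous: defragmenting may help\n", pct
        exit 0
      }
      printf "%s%% non-contiguous: no need to defragment\n", pct
      exit 1
    }'
}
```

After defining the function in a root shell, pipe the check into it:

# e2fsck -fn <devicefile> | frag_verdict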

If you want more precise details on the degree of fragmentation, you can alternatively use the command

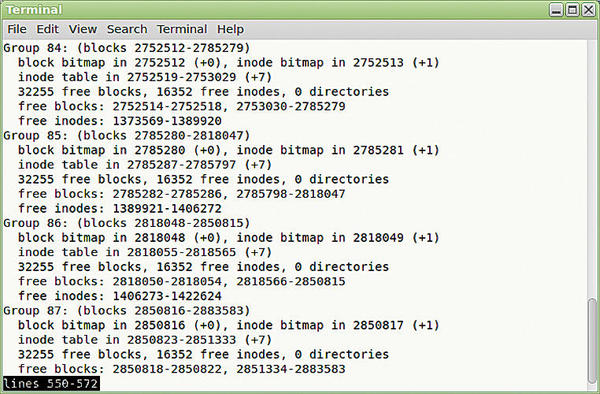

# dumpe2fs <devicefile>

for a detailed overview. The tool first lists information on the type of filesystem and then on the individual block groups and their data.

In the list of groups, take a closer look at each group’s Free blocks: line. If it lists only one range, everything is OK. If it lists several separate ranges, the free space in this group is split up, and the data stored in it are partially fragmented. The more groups show several ranges, the more heavily the data on the partition are fragmented (Figure 2).
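On a large partition, dumpe2fs prints thousands of groups, so counting by eye is tedious. This minimal sketch assumes the usual dumpe2fs layout, in which each group has an indented "  Free blocks: 100-200, 300-400" line with ranges separated by ", ", while the superblock header contains an unindented "Free blocks:" total that must be skipped. The function name is hypothetical.

```bash
# Hypothetical helper: read dumpe2fs output on stdin and count the block
# groups whose indented "Free blocks:" line lists more than one range.
# The unindented "Free blocks:" total in the header is skipped.
count_split_groups() {
  awk -F': ' '
    /^ +Free blocks:/ {
      groups++
      if (split($2, ranges, ", ") > 1) split_groups++
    }
    END {
      printf "%d of %d block groups have split free space\n", split_groups, groups
    }'
}
```

Typical use (dumpe2fs prints its version banner on stderr):

# dumpe2fs <devicefile> 2>/dev/null | count_split_groups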

Large Files

Frequently saving and deleting large files, such as multimedia files, often tears holes in the data structures on disk. If the data of a high-resolution movie is spread across widely separated sections, the picture can show artifacts, or playback may become jerky.

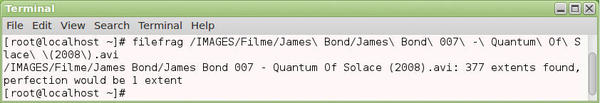

In such cases, it makes sense to check how heavily the file is fragmented. To do so, become root and run the command:

# filefrag <file>

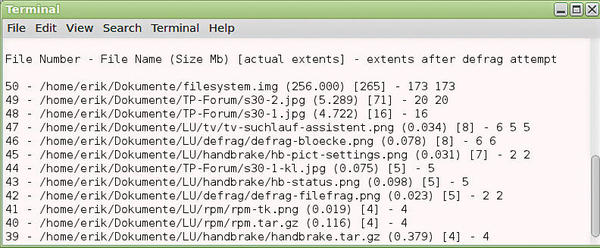

Filefrag then examines the file and reports more detailed information on its state (Figure 3). The number of extents, that is, the separate contiguous runs of blocks that make up the file, reveals the degree of fragmentation: The more extents the tool finds, the more the file is split up.

Figure 3: The filesystem often breaks large multimedia files into smaller chunks, which it then stores in different places under certain circumstances.
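To find the worst offenders in a whole media collection, you can run filefrag on many files at once and sort its output. This sketch assumes filefrag’s one-line summary format, "<file>: <n> extents found" (or "1 extent found"); the function name and the default minimum of two extents are hypothetical choices.

```bash
# Hypothetical helper: read filefrag summary lines on stdin and list the
# files with at least MIN extents (default 2), most fragmented first.
# Output format: <extents><TAB><file name>
most_fragmented() {
  local min=${1:-2}
  awk -v min="$min" '
    / extents? found$/ {
      n = $(NF - 2)                          # count precedes "extent(s) found"
      name = $0
      sub(/: [0-9]+ extents? found$/, "", name)  # keep names with spaces intact
      if (n + 0 >= min + 0) printf "%d\t%s\n", n, name
    }' | sort -k1,1nr
}
```

For example, to list files over 100MB with at least 10 extents below a directory:

# find <directoryname> -xdev -type f -size +100M -exec filefrag {} + | most_fragmented 10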


Cleaning Up

There are different methods for reuniting the pieces of fragmented files. If you have plenty of time to spare, you can copy the data from the affected partition to another disk, delete the original data, and then copy the files back to the original disk. Because the files are written back to a nearly empty partition, they end up in contiguous data areas.
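The same idea also works file by file: Writing a fresh copy of a file forces the filesystem to allocate new blocks, which gives it a chance to find a single contiguous area. The following is a minimal sketch under loud assumptions: The file is a regular file (not a symlink), has no hard links (the final rename would separate them), is not open in any program, and there is enough free space for one extra copy. The function name is hypothetical, and this is not how Defragfs itself is implemented.

```bash
# Hypothetical helper: rewrite one regular file so the filesystem allocates
# fresh blocks for it. Assumes enough free space, no hard links, and that
# nothing has the file open while it is being rewritten.
rewrite_file() {
  local src=$1 tmp
  # Create the temporary copy in the same directory, hence on the same
  # filesystem, so the final mv is an atomic rename.
  tmp=$(mktemp "$(dirname -- "$src")/.rewrite.XXXXXX") || return 1
  # -p keeps mode, ownership (where permitted), and timestamps.
  if cp -p -- "$src" "$tmp"; then
    mv -f -- "$tmp" "$src"
  else
    rm -f -- "$tmp"
    return 1
  fi
}
```

Running filefrag on the file before and after the rewrite shows whether the new copy ended up with fewer extents; there is no guarantee that it will if free space is itself badly split.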

The drawback with this method is the huge amount of time it takes for large data collections – especially if you use mass storage with a USB 2.0 interface as a backup medium.
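A quick estimate shows why. Assume 1TB of data and a sustained throughput of about 30MB/s for a USB 2.0 drive (the interface’s nominal 480Mbit/s works out to 60MB/s, and real-world rates are lower; the 30MB/s figure is an assumption). Because the data has to be copied out and then back again, 2TB travel over the interface:

```latex
t \approx \frac{2 \times 10^{12}\,\mathrm{B}}{30 \times 10^{6}\,\mathrm{B/s}}
  \approx 6.7 \times 10^{4}\,\mathrm{s} \approx 18.5\,\mathrm{h}
```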

Because the degree of fragmentation of a hard disk on Linux is often in the single-digit percentage range, even after a few years’ use, the developer community has not paid much attention to defragmentation in the past. Only a few command-line programs deal with this problem, and of these, only one impressed in our lab: Defragfs.

To begin, unpack the small tool into a folder. Despite its small size of just under 9KB, the Perl script provides amazing functionality: It doesn’t just defragment directories and partitions, it also provides detailed information on the success of each run.

To avoid having to specify the complete path when you call the tool, copy the program to the /usr/local/bin folder. Then, launch the tool with administrative privileges using the command:

# defragfs <directoryname> -a

Defragfs now automatically determines the appropriate values and merges the fragments of the individual files. Because the script copies the files back and forth, make sure there is enough free space on the partition that holds the directory in question.
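Before a run, a rough pre-flight check can compare the free space on the partition with the largest file in the directory, because a copy-based tool needs room for at least one full extra copy of whatever file it is currently processing. This sketch assumes GNU df (for --output) and GNU find (for -printf); the function name is hypothetical, and passing the check does not guarantee that the defragmenter will have enough room for its own bookkeeping.

```bash
# Hypothetical pre-flight check: succeed only if the filesystem holding DIR
# has more free space than the largest regular file below DIR, since a
# copy-based defragmenter needs room for one full extra copy of that file.
has_room_for_copy() {
  local dir=$1 avail largest
  # Free space in bytes; the last line of df's output holds the number.
  avail=$(df --output=avail -B1 -- "$dir" | tail -n 1 | tr -d ' ')
  # Size of the largest file, staying on the same filesystem (-xdev).
  largest=$(find "$dir" -xdev -type f -printf '%s\n' | sort -n | tail -n 1)
  largest=${largest:-0}
  echo "free: $avail bytes, largest file: $largest bytes"
  [ "$avail" -gt "$largest" ]
}
```

For example, has_room_for_copy <directoryname> && defragfs <directoryname> -a starts Defragfs only if the check passes.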

If you want to control the way the software works, run Defragfs without the -a parameter at the end of the command line. In this case, Defragfs prompts you for input multiple times. One shortcoming I observed in our lab is that the tool jumps back to the beginning of the routine after completing its work. If this happens, press Ctrl+C to quit the program once it has displayed the statistics for the modified files.

Success

Defragfs outputs a list of the files whose segments it merged. For each file, it shows the number of fragments before copying in square brackets and the number after the run at the end of the line. In Figure 4, you can see that large files of more than 100MB, in particular, have significantly fewer segments after running Defragfs.

Conclusions

Ext2 and its successors are so robust by design that they need virtually no manual intervention. Nevertheless, if you are a power user with a soft spot for multimedia or you operate a server with large data transfers, you will want to check your hard drives from time to time.

The only tool that worked reliably on the ext2 filesystem in our lab was Defragfs, and it works without any problems on mounted disks. Its only shortcoming is the amount of time it needs to save all the files to free areas, as with manual copying, and then restore them. However, Defragfs often rewards your patience with better disk performance.

Support Our Work

Linux Magazine content is made possible with support from readers like you. Please consider contributing when you’ve found an article to be beneficial.
