Exploring the power of generative adversarial networks

The Art of Forgery

Lead Image © Aliaksandr-Marko, 123RF.com

Auction houses are selling AI-based artwork that looks like it came from the grand masters. The Internet is peppered with photos of people who don't exist, and the movie industry dreams of resurrecting dead stars. Enter the world of generative adversarial networks.

Machine learning models that could recognize objects in images – and even create entirely new images – were once no more than a pipe dream. Although the AI world discussed various strategies, a satisfactory solution proved elusive. Then in 2014, after an animated discussion in a Montreal bar, Ian Goodfellow came up with a bright idea.

At a fellow student's doctoral party, Goodfellow and his colleagues were discussing a project that involved mathematically determining everything that makes up a photograph. Their idea was to feed this information into a machine so that it could create its own images. At first, Goodfellow declared that it would never work. After all, there were too many parameters to consider, and it would be hard to include them all. But back home, the problem was still on Goodfellow's mind, and he actually found the solution that same night: Neural networks could teach a computer to create realistic photos.

His plan required two networks, the generator and the discriminator, interacting as counterparts. The best way to understand this idea is to consider an analogy. On one side is an art forger (the generator). The forger wants to, say, paint a picture in the style of Vincent van Gogh in order to sell it as an original to an auction house. On the other side is an art detective (the discriminator), a real van Gogh connoisseur at the auction house who tries to identify forgeries. At first, the forger is quite inexperienced, and the detective immediately recognizes that the painting is not a real van Gogh. Nevertheless, the forger does not even think of giving up. The forger keeps practicing and trying to foist new and better paintings off on the detective. In each round, the painting looks more like an original by the famous painter, until the detective finally classifies it as genuine.

This story clearly describes the idea behind GANs. Two neural networks – a generator and a discriminator – play against each other and learn from each other. Initially, the generator receives a random signal and generates an image from it. Combined with instances of the training dataset (real images), this output forms the input for the second network, the discriminator. The discriminator then assigns each image to either the training dataset or the generator and receives information on whether or not it was correct. Through backpropagation, the discriminator's classification then returns a signal to the generator, which uses this feedback to adjust its output accordingly.

The game carries on in as many iterations as it takes for both networks to have learned enough from each other for the discriminator to no longer recognize where the final image came from. The generator part of a GAN learns to generate fake data by following the discriminator's feedback. By doing so, the generator convinces the discriminator to classify the generator's output as genuine.
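The interplay described above can be sketched in a few lines of Python. This is only a toy illustration under stated assumptions, not how production GANs are built. The "images" are single numbers, the "real" data is assumed to come from a normal distribution around 4.0, and both networks are reduced to two parameters each. All names (`Generator`, `Discriminator`, `discriminator_step`, `generator_step`, `train`) are hypothetical. The generator uses the common variant of the loss that rewards it when the discriminator rates its output as real.

```python
import math
import random

REAL_MEAN, REAL_STD = 4.0, 0.5  # assumed "real data" distribution (illustrative)


def sigmoid(s):
    # Logistic function, written to avoid overflow for large |s|
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


class Generator:
    """Turns random noise z into a fake sample: G(z) = a * z + b."""

    def __init__(self):
        self.a, self.b = 1.0, 0.0

    def sample(self, z):
        return self.a * z + self.b


class Discriminator:
    """Estimates the probability that x is real: D(x) = sigmoid(w * x + c)."""

    def __init__(self):
        self.w, self.c = 0.0, 0.0

    def prob_real(self, x):
        return sigmoid(self.w * x + self.c)


def discriminator_step(disc, real_x, fake_x, lr):
    """One gradient step on the loss -[log D(real) + log(1 - D(fake))]."""
    d_real, d_fake = disc.prob_real(real_x), disc.prob_real(fake_x)
    grad_w = -(1.0 - d_real) * real_x + d_fake * fake_x
    grad_c = -(1.0 - d_real) + d_fake
    disc.w -= lr * grad_w
    disc.c -= lr * grad_c


def generator_step(gen, disc, z, lr):
    """One gradient step on -log D(G(z)); the feedback flows back through D."""
    d_fake = disc.prob_real(gen.sample(z))
    grad_out = -(1.0 - d_fake) * disc.w  # derivative of the loss w.r.t. G(z)
    gen.a -= lr * grad_out * z
    gen.b -= lr * grad_out


def train(steps=2000, lr=0.05, seed=0):
    """Alternate discriminator and generator updates, one sample at a time."""
    rng = random.Random(seed)
    gen, disc = Generator(), Discriminator()
    for _ in range(steps):
        real_x = rng.gauss(REAL_MEAN, REAL_STD)
        fake_x = gen.sample(rng.gauss(0.0, 1.0))
        discriminator_step(disc, real_x, fake_x, lr)
        generator_step(gen, disc, rng.gauss(0.0, 1.0), lr)
    return gen, disc


if __name__ == "__main__":
    gen, disc = train()
    print(f"generator offset b = {gen.b:.2f} (real mean is {REAL_MEAN})")
    print(f"D(real mean) = {disc.prob_real(REAL_MEAN):.2f}")
```

In this sketch, the discriminator's weight `w` is the feedback signal: As long as real samples lie above the fakes, `w` is positive and pushes the generator's offset `b` upward toward the real data. A model this small may oscillate around the target rather than settle, which hints at why training real GANs is considered delicate.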

From AI to Art

One of the best-known examples of what GANs are capable of in practical terms is the painting "Portrait of Edmond Belamy" [1] from the collection "La Famille de Belamy" (Figure 1). Auctioned at Christie's for $432,500 in 2018, the artwork is signed by the algorithm that created it. That signature reveals the mathematical and game-theoretic foundation of GANs: the minimax strategy. This algorithm is used to determine the optimal game strategy for finite two-person zero-sum games such as checkers, nine men's morris, or chess. With its help and a training dataset of 15,000 classical portraits, the Parisian artist collective Obvious generated the likeness of Edmond Belamy, as well as those of his relatives [2].
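For readers who want to see the minimax strategy spelled out, the value function commonly given in the GAN literature looks as follows. The notation is not taken from this article: D(x) is assumed to be the discriminator's estimate that x is real, G(z) the generator's output for random noise z, and p_data and p_z the distributions of the real data and of the noise.

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\mathrm{data}}(x)} \left[ \log D(x) \right]
  + \mathbb{E}_{z \sim p_z(z)} \left[ \log \left( 1 - D(G(z)) \right) \right]
```

The discriminator tries to maximize this value by rating real images close to 1 and generated images close to 0, while the generator tries to minimize it by producing images that the discriminator rates as real.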

Figure 1: The Paris-based Obvious collective, which is made up of AI experts and artists exploring the creative potential of artificial intelligence, used a GAN to generate this painting (Source: Obvious).

Today, AI works of art are flooding art markets and the Internet. In addition, you will find websites and apps that allow users to generate their own artificial works in numerous styles using keywords or uploaded images. Figure 2, for example, comes from NightCafé [3] and demonstrates what AI makes of the buzzword "time machine" after three training runs. Users can achieve quite respectable results in a short time, as Figure 3 shows. But if you want to refine your image down to the smallest detail, you have to buy credit points.

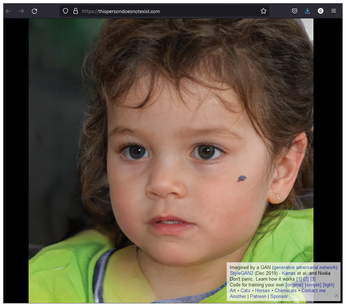

GANs don't just mimic brushstrokes. Among other things, they create extremely authentic photos of people. The website thispersondoesnotexist.com [4] provides some impressive examples. The site is backed by AI developer Phillip Wang and NVIDIA's StyleGAN [5]. On each refresh, StyleGAN generates a new, almost frighteningly realistic image of a person who is completely fictitious (Figure 4). Right off the bat, it's very difficult to identify the image as a fake.

Figure 4: NVIDIA's StyleGAN delivers such good results that viewers often can't tell if it's a real person or not (Source: thispersondoesnotexist.com).

Jevin West and Carl Bergstrom of the University of Washington, as part of the Calling Bullshit Project, at least give a few hints on their website at whichfaceisreal.com that can help users distinguish fakes [6]. There are, for example, errors in the images that look like water stains and clearly identify a photo as generated by StyleGAN, or details of the hairline or the earlobes that do not really match. Sometimes irregularities appear in the background, too. The AI doesn't really care about the background because it is trained to create faces.

GANs and Moving Images

Faces are also the subject of another still fairly unexplored application for GANs: the movie industry. Experts have long recognized the potential of GAN technology for film. It is used, for example, to correct problematic blips in dubbed series or movies. The actors' facial expressions and lip movements often do not match the dialog spoken in another language, and the audience finds this dissonance distracting.

GANs and deep fakes solve the problem. Deep fakes replace the facial expressions and lip movements from the original recording. To create deep fakes, application developers need to feed movies or series in a specific language into training datasets. The GAN can then mime new movements on the actors' faces to match the dubbed speech.

But this is just a small teaser of what GANs could do in the context of movies. In various projects, researchers are working on resurrecting the deceased through AI. For example, developers at MIT resurrected Richard Nixon in 2020, letting him bemoan a failed moon mission in a fake speech to the nation [7]. The same method could theoretically be applied to long-deceased Hollywood celebrities.

GANTheftAuto

The traditional way to develop computer games is to cast them in countless lines of code. Programming simple variants does not pose any special challenges for AI. A set of training data and NVIDIA's GameGAN generator [8], for example, are all you need for a fully interactive game world to emerge at the end. The Pacman version by an NVIDIA AI and an Intel model that can be used to implement far more realistic scenes in video games demonstrate how far the technology has advanced.

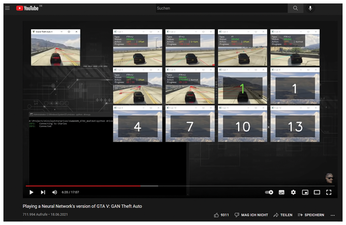

However, this by no means marks the limits of what is possible. In 2021, AI developers Harrison Kinsley and Daniel Kukiela reached the next level with GANTheftAuto [9] (Figure 5). With the help of GameGAN, they managed to generate a playable demo version of the 3D game Grand Theft Auto (GTA) V. To do this, the AI – as in NVIDIA's Pacman project – has to do exactly one thing: play, play, and play again.

Figure 5: By running through a road section in GTA V over and over again, the AI generates a visually realistic demo (Source: YouTube).

Admittedly, the classic action-adventure game, with its racing and third-person shooter influences, is far more complex. The training overhead increases massively with this complexity, which is why Kinsley and Kukiela initially concentrated on a single street. They had their AI run the course over and over again in numerous iterations, collecting the training material itself. While doing so, GameGAN learned to distinguish between the car and the environment.

The bottom line: GANTheftAuto is still far removed from the graphical precision of full-fledged video games, but it is worth watching and likely to be trendsetting. After all, the AI managed to correctly reproduce details from GTA V, such as the reflection of sunlight in the rear window or the shadows cast by the car, as Kinsley explains in a demo video on YouTube [10].
