In statistical computations, intuition can be very misleading

Guess Again

Even hardened scientists can make mistakes when interpreting statistics. Mathematical experiments can give you the right ideas to prevent this from happening, and quick simulations in Perl nicely illustrate and support the learning process.

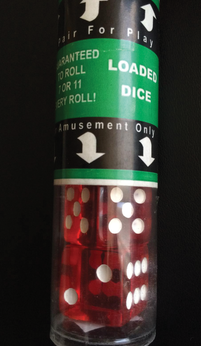

If you hand somebody a die in a game of Ludo [1], and they throw a one on each of their first three turns, they are likely to become suspicious and check the sides of the die. That's just relying on intuition – but when can you scientifically demonstrate that the die is loaded (Figure 1)? After five throws that all come up as ones? After ten throws?

Each experiment with dice is a game of probabilities. What exactly happens is a product of chance. What matters is not so much the result of a single throw as the tendency over many throws. A player could throw a one three times in succession from pure bad luck. Although the odds are low (1 in 216 for a fair die), it still happens, and you would be ill advised to jump to conclusions about the die based on such a small number of attempts.
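To put numbers on the three, five, and ten throws mentioned above, the following is a minimal sketch, not the article's own code. The article works in Perl; this example uses Python instead, and the function names `prob_all_ones` and `simulate_all_ones` are made up for illustration. It assumes a fair six-sided die with independent throws, computes the exact chance of a run of ones, and checks it against a quick simulation.

```python
import random
from fractions import Fraction


def prob_all_ones(throws: int) -> Fraction:
    """Exact probability that a fair die shows a one on every throw."""
    return Fraction(1, 6) ** throws


def simulate_all_ones(throws: int, trials: int, seed: int = 0) -> float:
    """Estimate the same probability by repeating the experiment many times."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    hits = 0
    for _ in range(trials):
        # One trial succeeds only if every throw in it comes up as a one.
        if all(rng.randint(1, 6) == 1 for _ in range(throws)):
            hits += 1
    return hits / trials


if __name__ == "__main__":
    for n in (3, 5, 10):
        p = prob_all_ones(n)
        print(f"{n:2d} ones in a row: {p} (about {float(p):.2e})")
    print("simulated, 3 throws:", simulate_all_ones(3, 200_000))
```

With a fair die, three ones in a row occur about once in 216 attempts, five about once in 7,776, and ten about once in 60 million. The simulated estimate for three throws should land close to the exact value, but it varies with the seed and the number of trials.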

[...]
