Cloud Backup with Duplicity

Data Safe

If you’re looking for a secure and portable backup technique, try combining the trusty command-line utility Duplicity with a cloud storage account.

The Duplicity command-line tool is a popular option for storing backups in insecure environments because it encrypts archives by default. If encrypted backups fall into the hands of third parties, their contents stay protected as long as the key and passphrase remain secret. Many users configure Duplicity to back up to a local file server, but you can also send your files to an FTP server, an SSH server, or even to cloud storage such as Amazon S3 or Ubuntu One (U1). Backing up to the cloud offers the protection of a remote location and makes the archive available from anywhere. In this article, I describe how to use Duplicity to back up files to the cloud.

About Duplicity

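When first launched, Duplicity creates a full backup; later runs store only the incremental changes, called deltas, relative to the previous backup. Both full and incremental backups are written as a series of archive files that Duplicity calls volumes, and each incremental backup depends on the full backup and on all incremental backups before it in the chain.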

In principle, then, you could create a single full backup and rely on incremental backups from then on. The Duplicity developers warn against this, however: Not only can a corrupted incremental volume ruin every backup that follows it in the chain, but restoring files also takes a long time if the software has to work through all of the incremental backups.

If you prefer a GUI-based approach, Déjà Dup provides a graphical interface for Duplicity that is very easy to use and covers many essential functions. It is part of Gnome and is available in distributions such as Ubuntu and Fedora, but it cannot be used on systems without a graphical interface. Other command-line tools, such as Duply, Backupninja, and Dupinanny, also aim to simplify the handling of Duplicity.

Turnkey

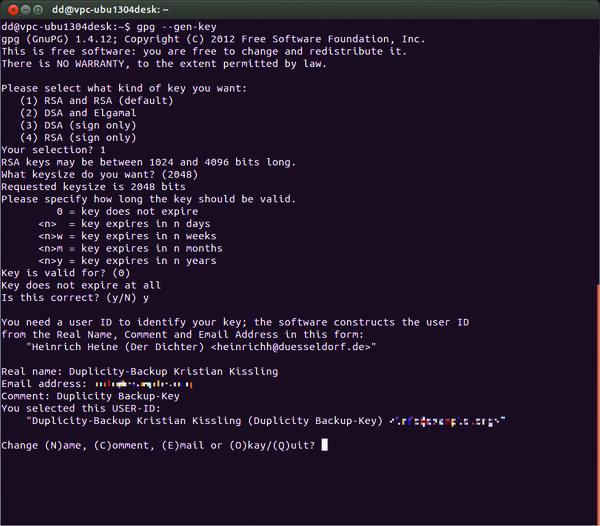

If you do not have your own GPG key, or if you want to create one for your backups, you can do so by running

gpg --gen-key

(Figure 1).

In the comment field, you can note the purpose of the key if desired. You will need the passphrase later when creating and decrypting encrypted backups. Additionally, Duplicity can give your archives a digital signature if you pass it the option

--sign-key <key-ID>

when creating a backup.

The passphrase protects the key in case it falls into the wrong hands. To avoid losing access to your data if the system fails, you also need a copy of the keys in a safe place. External media, such as USB sticks, are useful places to save the public and secret keys.

The gpg -k and gpg -K commands list your public and secret keys, respectively, including their key IDs. Typing

$ gpg --output /media/<user>/<USB-Stick>/backupkey_pub.gpg \
  --armor --export <key-ID>
$ gpg --output /media/<user>/<USB-Stick>/backupkey_sec.gpg \
  --armor --export-secret-keys <key-ID>

exports both keys. On another system,

$ gpg --import /media/<user>/<USB-Stick>/backupkey_pub.gpg \
  /media/<user>/<USB-Stick>/backupkey_sec.gpg

installs the keys again.
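The export and import steps can be wrapped in a pair of shell functions. This is a minimal sketch, assuming GnuPG is installed; the function names and the backupkey_*.gpg file names are illustrative choices, and the umask setting is there only so that the exported secret key is readable by its owner alone.

```shell
#!/usr/bin/env bash
# Sketch: export the public and secret key for one key ID into a directory
# (e.g., a mounted USB stick) and import them again elsewhere.
# Function and file names are hypothetical, not part of GnuPG or Duplicity.

export_backup_keys() {
  local key_id="$1" dest_dir="$2"
  # Run in a subshell so the restrictive umask does not leak to the caller;
  # files created here get mode 600 (owner read/write only).
  ( umask 077
    gpg --output "$dest_dir/backupkey_pub.gpg" --armor --export "$key_id" &&
    gpg --output "$dest_dir/backupkey_sec.gpg" --armor --export-secret-keys "$key_id"
  )
}

import_backup_keys() {
  local src_dir="$1"
  # gpg --import accepts several key files in one call
  gpg --import "$src_dir/backupkey_pub.gpg" "$src_dir/backupkey_sec.gpg"
}
```

On the original machine you would call export_backup_keys <key-ID> /media/<user>/<USB-Stick>, and on the new one import_backup_keys with the same directory.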

Four Goals

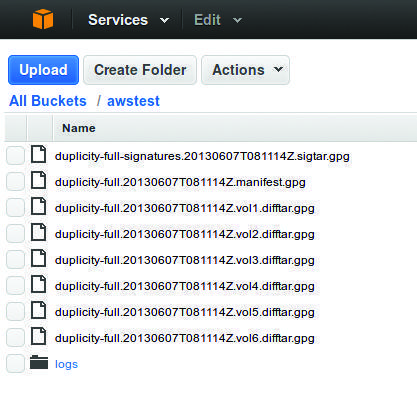

The script in Listing 1 creates encrypted backups of home directories for four different targets: a local directory, an SSH server (via the Paramiko back end), Ubuntu One, and Amazon’s S3 (Figure 2).

Figure 2: If you create a bucket in Amazon’s S3 cloud storage, you can store encrypted backups with Duplicity.


Listing 1: backup.sh

#!/bin/bash
# Passphrase for GPG key
export PASSPHRASE=<Passphrase>

# Key ID of the GPG key
export GPG_KEY=<key-ID>

# Amazon access credentials
export AWS_ACCESS_KEY_ID=<Key-ID>
export AWS_SECRET_ACCESS_KEY=<secret key>

# Backup source
SOURCE=/home

# Backup targets
# Local backup
LOCAL_BACKUP=file:///home/user/backup
# Backup via SSH
SSH_BACKUP=sftp://user@remote/backup
# Backup to Ubuntu One
UBUNTU_ONE=u1+http://<target_folder>
# Amazon S3
AMAZON_S3=s3+http://<Bucket-Name>

# Duplicity commands
# Local
duplicity --encrypt-key "${GPG_KEY}" "${SOURCE}" "${LOCAL_BACKUP}"
# SSH
export FTP_PASSWORD=<SSH-Password>
duplicity --encrypt-key "${GPG_KEY}" "${SOURCE}" "${SSH_BACKUP}"
unset FTP_PASSWORD
# Ubuntu One
duplicity --encrypt-key "${GPG_KEY}" "${SOURCE}" "${UBUNTU_ONE}"
# Amazon S3
duplicity --encrypt-key "${GPG_KEY}" --s3-use-new-style --s3-european-buckets "${SOURCE}" "${AMAZON_S3}"

# Reset passwords
unset AWS_ACCESS_KEY_ID
unset AWS_SECRET_ACCESS_KEY
unset PASSPHRASE

Keep in mind how Duplicity interprets paths. For local backups, it expects an absolute path, hence the three slashes in file:///home/user/backup. If the backup destination is an SSH server, however, the path after the hostname is relative to the home directory of the logged-in user; in Listing 1, the SSH backup thus ends up in /home/user/backup on the remote machine.
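The path rules can be captured in two small helper functions. This is a sketch under the assumption, taken from the URL format in the Duplicity man page, that a second slash after the hostname marks an absolute remote path; the helper names are hypothetical, so check the behavior against your Duplicity version.

```shell
#!/usr/bin/env bash
# Sketch: build Duplicity target URLs. Helper names are hypothetical.

# Local target: file:// followed by an absolute path yields three slashes.
local_url() {
  printf 'file://%s\n' "$1"
}

# SSH target: a path without a leading slash is resolved against the
# remote user's home directory; a path starting with a slash produces a
# double slash after the host, which Duplicity treats as absolute.
sftp_url() {
  local user="$1" host="$2" path="$3"
  printf 'sftp://%s@%s/%s\n' "$user" "$host" "$path"
}
```

For example, sftp_url user remote backup gives the relative target used in Listing 1, while sftp_url user remote /srv/backup yields sftp://user@remote//srv/backup.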

Safe in the Cloud

If you want to use Ubuntu One, Duplicity needs an active X session, because the password prompt appears in a window. This option is thus primarily of interest to users of the Déjà Dup graphical interface. Ubuntu One interprets the path specified in Duplicity as a folder relative to the user’s home directory.

If you use Amazon’s S3 cloud storage (a free usage tier is available), you first need to create a user account, wait for your access credentials, and create what is known as a bucket. Duplicity requires the name of this bucket as the path. If the bucket is located in Amazon’s European region, Duplicity also expects the parameters --s3-use-new-style and --s3-european-buckets.

To test the backup mechanism first, you can use the -v8 or -v9 switch to get more detailed output from Duplicity. If you will be using an SSH server or Ubuntu One for your backups, you also need the python-paramiko package; S3 users additionally need to install python-boto.

In Listing 1, the passwords are passed in as environment variables and unset again once the commands have finished. Duplicity expects FTP and SSH passwords in the FTP_PASSWORD variable. If you prefer an unencrypted backup, use the --no-encryption parameter.
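Listing 1 exports FTP_PASSWORD and unsets it afterward. A slightly tighter variant sets the variable only in the environment of the single duplicity process, so there is nothing left to unset. This is a minimal sketch, assuming the password is kept in a file readable only by the backup user and that GPG_KEY is set as in Listing 1; the function name and the secrets file are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: pass the SSH password to one duplicity call only.
# Function name and secrets file are hypothetical; GPG_KEY comes from Listing 1.
sftp_backup() {
  local source="$1" target="$2" secret_file="$3"
  local ssh_password
  # Read the password from a file (trailing newline is stripped)
  ssh_password=$(<"$secret_file") || return 1
  # The prefix assignment puts FTP_PASSWORD into this command's
  # environment only; the calling shell never exports it.
  FTP_PASSWORD="$ssh_password" duplicity --encrypt-key "$GPG_KEY" \
    "$source" "$target"
}
```

A call such as sftp_backup /home sftp://user@remote/backup /root/.duplicity-ssh-password would replace the export/duplicity/unset sequence for the SSH target.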

Fine Tuning

If you do not want to back up all the home directories (Listing 1), you can exclude files from the backup. The parameters --include=${SOURCE}/carl, --include=${SOURCE}/lenny, and --exclude='**' ensure that only the home directories belonging to carl and lenny are backed up. The order matters: Duplicity applies the first include or exclude rule that matches a file, so the catch-all exclude has to come last.
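The include/exclude selection can be generated from a list of user names. This is a sketch under the assumptions of Listing 1 (GPG_KEY set, home directories below the source path); the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: back up only the home directories of the named users.
# Duplicity checks selection options in order and uses the first match,
# so the final --exclude '**' drops everything not included before it.
# Function name is hypothetical; GPG_KEY comes from Listing 1.
backup_selected_homes() {
  local source="$1" target="$2"
  shift 2
  local -a selection=()
  local user
  for user in "$@"; do
    selection+=(--include "$source/$user")
  done
  # '**' is quoted so the shell passes it to duplicity unexpanded
  duplicity --encrypt-key "$GPG_KEY" "${selection[@]}" --exclude '**' \
    "$source" "$target"
}
```

For example, backup_selected_homes /home file:///home/user/backup carl lenny corresponds to the carl and lenny selection described above.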

Duplicity also stores the backup as volumes with a default size of 26MB. If your backup is in the gigabyte range, this quickly produces an impressive collection of files. You might want to raise the volume size to approximately 250MB with --volsize 250, but use this option with caution, because it requires a large amount of main memory.
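A quick calculation shows how the volume size affects the number of files. This sketch ignores compression and encryption overhead, so it gives only a rough estimate; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: estimate how many volume files a backup produces.
# Sizes are in MB; compression is ignored, so this is only a rough figure.
# Function name is hypothetical.
volume_count() {
  local backup_mb="$1" volsize_mb="$2"
  # Ceiling division: a partially filled last volume still counts
  echo $(( (backup_mb + volsize_mb - 1) / volsize_mb ))
}
```

With the 26MB default, volume_count 10240 26 prints 394 for a 10GB backup, whereas volume_count 10240 250 prints 41.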

Check It Out

Next, you should check whether the signatures of the backed-up files match those of the originals. Duplicity’s verify command performs this comparison and displays an error message if they differ (-v sets the verbosity level):

$ duplicity verify -v4 --s3-use-new-style --s3-european-buckets \
  --encrypt-key ${GPG_KEY} ${AMAZON_S3} ${SOURCE}

You do not need to enter a GPG password here because Duplicity typically retrieves the required information from ~/.cache/duplicity. You can write the report to a file with --log-file and have it mailed to you or sent by sync software if an irregularity occurs. That way, whoever is responsible for the backup is notified if it fails and can take any necessary steps.
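The notification idea can be sketched as a function that runs the verification and mails the log if duplicity exits with a non-zero status. This assumes that your Duplicity version reports failed or mismatching verifications through its exit status, that a mail command (e.g., from mailutils) is available, and that GPG_KEY is set as in Listing 1; the function name, log path, and recipient are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: verify the S3 backup, log the report, and mail it on failure.
# Assumes a working `mail` command and GPG_KEY from Listing 1.
# Function name, log path, and recipient are hypothetical.
verify_and_notify() {
  local target="$1" source="$2" logfile="$3" recipient="$4"
  if ! duplicity verify -v4 --s3-use-new-style --s3-european-buckets \
      --encrypt-key "$GPG_KEY" --log-file "$logfile" "$target" "$source"; then
    # Send the collected report so the problem does not go unnoticed
    mail -s "Duplicity verification failed on ${HOSTNAME:-localhost}" \
      "$recipient" < "$logfile"
    return 1
  fi
}
```

Because the function returns a non-zero status on failure, a calling script can also react to it, for example by skipping the deletion of old backups.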

At this point, you might also consider setting up a routine that regularly checks the free or used space, or both, on the external storage and warns you when it runs low.
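For a locally mounted target, such a check can be as simple as reading the usage percentage from df. This is a minimal sketch that works only for file systems visible on the backup machine (not for S3 or Ubuntu One); the function name and threshold are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: warn when the file system holding a local backup target is fuller
# than a given percentage. Function name and threshold are hypothetical.
check_backup_space() {
  local dir="$1" max_percent="$2" used
  # df -P prints one line per file system in POSIX format; column 5 is "Use%"
  used=$(df -P "$dir" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }')
  [[ -n "$used" ]] || return 2
  if (( used > max_percent )); then
    echo "WARNING: $dir is ${used}% full (limit ${max_percent}%)" >&2
    return 1
  fi
  echo "$dir: ${used}% used"
}
```

Calling check_backup_space /home/user/backup 90 from the backup script would print a warning on stderr, and return a non-zero status, once the target file system is more than 90 percent full.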

If you want to retrieve a specific file from the backup, you can first view all of the files from the last backup:

$ duplicity list-current-files --s3-use-new-style \
  --s3-european-buckets \
  --encrypt-key ${GPG_KEY} ${AMAZON_S3}

If you want the list to show the state at a specific point in time, you can set the --time parameter, which expects a time in date-time format (e.g., 1997-07-16T19:20:30+01:00) or as YYYY/MM/DD.
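To find a particular file, the listing can be filtered with grep. This sketch reuses the S3 options from Listing 1 and assumes GPG_KEY is set; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: list the files in the backup as of a given time and filter them.
# Function name is hypothetical; S3 options and GPG_KEY as in Listing 1.
find_in_backup() {
  local target="$1" when="$2" pattern="$3"
  duplicity list-current-files --time "$when" \
    --s3-use-new-style --s3-european-buckets \
    --encrypt-key "$GPG_KEY" "$target" | grep -- "$pattern"
}
```

For example, find_in_backup "${AMAZON_S3}" 2013/06/08 carl/ shows only the entries below carl’s home directory as of that date.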

This parameter comes into play when restoring files:

$ duplicity --time 2013/06/08 --s3-use-new-style \
  --s3-european-buckets \
  --file-to-restore <path/to/sourcefile> \
  ${AMAZON_S3} <path/to/targetfolder>/<sourcefile>

Note that the --time parameter must come before the restore arguments. The --file-to-restore path refers to the source file in the Amazon bucket and is given relative to the directory that was backed up (e.g., carl/notes.txt for /home/carl/notes.txt). The target, in turn, must include the name of the file in addition to the recovery path.
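The restore call can be wrapped so that the target file name is derived from the path inside the backup. This is a sketch using the S3 options from the article; the function name is hypothetical, and the passphrase is assumed to be available (e.g., via PASSPHRASE) as in Listing 1.

```shell
#!/usr/bin/env bash
# Sketch: restore one file as it existed at a given time.
# relpath is relative to the backed-up directory (e.g., carl/notes.txt).
# Function name is hypothetical; S3 options as in the article.
restore_file() {
  local target="$1" when="$2" relpath="$3" dest_dir="$4"
  mkdir -p "$dest_dir" || return 1
  # ${relpath##*/} strips the directories, leaving only the file name
  duplicity --time "$when" --s3-use-new-style --s3-european-buckets \
    --file-to-restore "$relpath" "$target" "$dest_dir/${relpath##*/}"
}
```

For example, restore_file "${AMAZON_S3}" 2013/06/08 carl/notes.txt /tmp/restore would write the file to /tmp/restore/notes.txt.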

Backup Cycles

Because full backups take up so much space, you should decide in advance how often to create them. The right interval depends on how often the data changes and how much space is available, among other things.

The option --full-if-older-than 1M tells Duplicity to create a full backup if the last full backup is more than a month old. This removes the need to write complicated scripts or several cron jobs to switch between full and incremental backups.
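In practice, this means a single backup command can replace separate full and incremental runs. The sketch below assumes GPG_KEY from Listing 1 and passes any extra options, such as the S3 switches, through unchanged; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: incremental backup by default, new full backup once the last
# full backup is older than one month. Extra arguments (e.g., the S3
# options) are passed through. Function name is hypothetical.
backup_with_monthly_full() {
  local source="$1" target="$2"
  shift 2
  duplicity --full-if-older-than 1M --encrypt-key "$GPG_KEY" "$@" \
    "$source" "$target"
}
```

For the S3 target, the call would be backup_with_monthly_full "${SOURCE}" "${AMAZON_S3}" --s3-use-new-style --s3-european-buckets.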

For an automated backup, the commands in Listing 1 and a verification routine can be built into a script that cron runs on a regular basis. The following crontab entry, for example, runs the script every day at 2:01am and appends its output to a logfile:

01 2 * * * </path/to/backup-script> >> </path/to/logfile>

If the backup computer does not run around the clock, you can use Anacron instead, which completes the necessary backups later even if the computer was switched off at the scheduled time.
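Anacron reads its jobs from /etc/anacrontab, where each line lists the period in days, a delay in minutes, a job identifier, and the command. The following minimal sketch registers the backup script there only if no job with the same identifier exists yet; the function name, the job identifier, and the script path are illustrative assumptions.

```shell
#!/usr/bin/env bash
# Sketch: add a job to an anacrontab file unless its identifier is present.
# Fields: period (days), delay (minutes), job identifier, command.
# Function name, job ID, and script path are hypothetical.
add_anacron_job() {
  local tab="$1" period="$2" delay="$3" job_id="$4" command="$5"
  # Column 3 holds the job identifier; do nothing if it is already registered
  if [[ -f "$tab" ]] &&
     awk -v id="$job_id" '$3 == id { found = 1 } END { exit !found }' "$tab"; then
    return 0
  fi
  printf '%s\t%s\t%s\t%s\n' "$period" "$delay" "$job_id" "$command" >> "$tab"
}
```

Run as root, add_anacron_job /etc/anacrontab 1 15 duplicity-backup </path/to/backup-script> would schedule the script once a day, 15 minutes after Anacron finds the job due.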

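To delete old backups without manual intervention, you can easily extend Listing 1. The command

$ duplicity remove-older-than 6M --force \
  --s3-use-new-style --s3-european-buckets \
  --encrypt-key ${GPG_KEY} ${AMAZON_S3}

automatically deletes any backups in Amazon S3 storage that are more than six months old. Duplicity does not delete backup sets that newer incremental backups still depend on, so an old full backup remains until no later incremental backup needs it.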
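The deletion step can be combined with Duplicity’s cleanup command, which removes leftover files from interrupted runs. This is a sketch using the S3 options from the article; the function name and the six-month default are illustrative, and cleanup, like remove-older-than, only deletes anything when --force is given.

```shell
#!/usr/bin/env bash
# Sketch: prune old backup sets, then remove leftovers of failed runs.
# Function name and default age are hypothetical; S3 options as in the article.
prune_backups() {
  local target="$1" age="${2:-6M}"
  # Stop if the first step fails, so cleanup does not hide the error
  duplicity remove-older-than "$age" --force \
    --s3-use-new-style --s3-european-buckets "$target" &&
  duplicity cleanup --force \
    --s3-use-new-style --s3-european-buckets "$target"
}
```

Calling prune_backups "${AMAZON_S3}" at the end of the backup script keeps roughly six months of history; a second argument such as 3M shortens the retention period.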
