Backdoors in Machine Learning Models

Interest in machine learning has grown rapidly over the past 20 years, driven by major advances in speech recognition and automatic text translation. Recent developments, such as generating text and images and solving mathematical problems, have shown the potential of learning systems. Because of these advances, machine learning is also increasingly used in safety-critical applications, for example in autonomous driving or in access systems that evaluate biometric characteristics. Machine learning is never error-free, however, and wrong decisions can sometimes lead to life-threatening situations. These limitations are well known and are usually taken into account when developing and integrating machine learning models. For a long time, however, less attention was paid to what happens when someone tries to manipulate the model intentionally.

Adversarial Examples

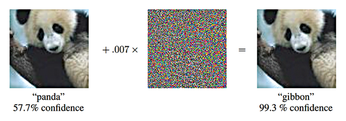

Experts have raised the alarm about adversarial examples [1]: specifically manipulated images that can fool even state-of-the-art image recognition systems (Figure 1). In the most dangerous case, people cannot even perceive a difference between the adversarial example and the original image from which it was computed. The model correctly identifies the original but misclassifies the adversarial example. An attacker can even predetermine the category into which the adversarial example will be misclassified. Developments [2] in adversarial examples have shown that you can also manipulate the texture of real-world objects such that a model misclassifies the manipulated objects, even when viewed from different directions and distances.

Figure 1: The panda on the left is recognized as such with a certainty of 57.7 percent. Adding a certain amount of noise (center) creates the adversarial example. Now the animal is classified as a gibbon [3]. © arXiv preprint arXiv:1412.6572 (2014)
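To make the idea of "adding a certain amount of noise" concrete, the following is a minimal sketch of the fast gradient sign method, the technique described in the preprint credited in Figure 1. It is not the setup used to produce the panda image: the tiny linear softmax classifier, its random weights, the 64-value "image," and the step size `eps` are all assumptions chosen so that the example runs with NumPy alone.

```python
import numpy as np


def softmax(z):
    """Numerically stable softmax over a 1-D vector of logits."""
    e = np.exp(z - z.max())
    return e / e.sum()


def loss_and_input_grad(W, b, x, label):
    """Cross-entropy loss of a linear softmax classifier and its
    gradient with respect to the input x (not the weights)."""
    p = softmax(W @ x + b)
    loss = -np.log(p[label])
    onehot = np.zeros_like(p)
    onehot[label] = 1.0
    grad_x = W.T @ (p - onehot)  # chain rule: dL/dx = W^T (p - y)
    return loss, grad_x


def fgsm(W, b, x, label, eps):
    """Move every input value by +/- eps in the direction that increases the loss."""
    _, grad_x = loss_and_input_grad(W, b, x, label)
    return x + eps * np.sign(grad_x)


# Hypothetical toy model and input (assumptions, not from the article).
rng = np.random.default_rng(0)
W = 0.1 * rng.normal(size=(3, 64))  # 3 classes, 64 input values
b = np.zeros(3)
x = rng.uniform(size=64)            # stand-in for a flattened image
label = int(np.argmax(W @ x + b))   # treat the model's own prediction as the true class

eps = 0.05
x_adv = fgsm(W, b, x, label, eps)

loss_before, _ = loss_and_input_grad(W, b, x, label)
loss_after, _ = loss_and_input_grad(W, b, x_adv, label)
print("largest change to any input value:", np.max(np.abs(x_adv - x)))
print("loss before:", loss_before, "loss after:", loss_after)
print("predicted class before:", label, "after:", int(np.argmax(W @ x_adv + b)))
```

No input value changes by more than `eps`, which is why such perturbations can remain hard to see, yet the loss for the original class rises. Whether the predicted class actually flips depends on the model and on `eps`. A targeted variant, in which the attacker picks the wrong class in advance, would instead step against the gradient of the loss computed for the chosen target class. With real images, the result would normally also be clipped back to the valid pixel range.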

[...]
